Storage

The storage volumes attached to your job are specific to the project in which the job runs, i.e. all jobs run within one project see the same files in a given volume.

Only files saved within a mounted volume are stored permanently. All files stored outside a mounted volume are ephemeral and will be lost when the job ends.

Available storage volumes

/project_antwerp

On the Antwerp-based clusters (i.e. clusters in the range 100-199), a 100TB DDN A3I storage cluster is available under /project_antwerp.

This storage is:

- Persistent: The data is not lost when your job stops

- Shared: You can access it in multiple jobs running in the UA datacenter (=clusters in the range 100-199), and you’ll be able to access the same files.

- Project Specific: Each project has its own separate version of this storage.

- Project Private: You can only access the project storage of your own project.

- Large

- Fast

This storage has no size quota at the moment, but it does limit the number of “inodes”.

This means you can only create a limited number of files and directories.

There is an automatically generated file named .quota.txt which contains details about your current usage.
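Because every file and directory takes up one inode, counting the entries in a directory tree gives a rough idea of how much of the limit it consumes. The following is a minimal sketch: the helper name `count_inodes` is hypothetical, and it assumes that the quota counts entries the same way `find` does (hard links, for example, would be counted more than once here).

```shell
#!/bin/sh
# count_inodes DIR: print the number of files and directories under DIR,
# including DIR itself. Hypothetical helper, not part of GPULab.
count_inodes() {
    # -xdev keeps find on the same filesystem as DIR;
    # tr strips the padding that some wc implementations add.
    find "$1" -xdev | wc -l | tr -d ' '
}
```

For example, `count_inodes /project_antwerp/dataset` (the `dataset` folder is only an illustration) shows how many inodes one dataset takes up, which helps to spot directories containing very many small files.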

The most straightforward way to mount this storage is:

    "storage": [
        {
            "containerPath": "/project_antwerp"
        }
    ],

This will cause a directory /project_antwerp to be available to you.

If you want this storage to be mounted under /project, you can specify:

    "storage": [
        {
            "hostPath": "/project_antwerp",
            "containerPath": "/project"
        }
    ],

/project_scratch

The scratch storage is a fast, slave-specific storage, typically backed by SSDs in RAID0.

This storage is:

- Persistent: The data is not lost when your job stops

- Shared: You can access it in multiple jobs running on the same slave node, and you’ll be able to access the same files.

- Project Specific: Each project has its own separate version of this storage.

- Project Private: You can only access the project storage of your own project.

- Large

- Very Fast: local RAID0 SSDs.

- Breakable: This storage is not backed up, and RAID0 makes it fragile. Only store files here that you can afford to lose.

Consider binding your job to a specific slave with slaveName if you want to access files stored on a specific scratch storage.

The following slaves have a scratch storage available:

- slave6A: The HGX-2 in Ghent has a 94TB scratch storage

- slave103A: The DGX-2 in Antwerp has a 28TB scratch storage

- slave103B: The DGX-1 in Antwerp has a 7TB scratch storage

Caution

As these storages are backed by a RAID0 disk array, a single disk failure can corrupt the whole storage. For example, on the HGX-2 the storage is backed by 16 enterprise SSDs, each with an MTBF of 2,000,000 hours.

Only store files here that you can afford to lose.
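To put the HGX-2 numbers in perspective, here is a back-of-the-envelope estimate. It assumes that the disks fail independently and at a constant rate, which is a simplification; under that assumption a RAID0 array fails as soon as any one disk fails, so the MTBF of the array is the per-disk MTBF divided by the number of disks. The function name `raid0_mtbf_hours` is made up for this illustration.

```shell
#!/bin/sh
# raid0_mtbf_hours DISK_MTBF_HOURS NUM_DISKS
# Rough estimate only: assumes independent disks with a constant failure rate,
# so the failure rates of the individual disks simply add up.
raid0_mtbf_hours() {
    echo $(( $1 / $2 ))
}

raid0_mtbf_hours 2000000 16    # prints 125000
```

Under these assumptions, 125,000 hours corresponds to roughly 14 years for the array as a whole, compared to more than 200 years for a single disk.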

To mount the project scratch folder to /project_scratch, you specify it as containerPath:

    "storage": [
        {
            "containerPath": "/project_scratch"
        }
    ],

This will cause a directory /project_scratch to be bound to the local scratch disk inside your docker container.

If you want the scratch to be mounted under /project, you can specify:

    "storage": [
        {
            "hostPath": "/project_scratch",
            "containerPath": "/project"
        }
    ],

/project_ghent

This storage is:

- Persistent: The data is not lost when your job stops

- Shared: You can access it in multiple jobs running in the iGent datacenter (=clusters in the range 0-99), and you’ll be able to access the same files.

- Project Specific: Each project has its own separate version of this storage.

- Project Private: You can only access the project storage of your own project.

- Large

- Fast

Important: This storage is not available for legacy authority users. If you want to migrate data from a legacy authority project to the old /project storage, contact us.

GPULab jobs running on Ghent-based slaves (clusters in the range 0-99) can access a shared storage for projects.

This is a fast storage, connected with a 10Gbit/s link or a 100Gbit/s InfiniBand link, depending on the node.

The data is shared instantly, and is thus instantly available everywhere (it’s the same NFS share everywhere).

Quotas are set on this storage. If you run out of space and have a good reason to need more, you can contact us to increase your quota. Please don’t use or start other projects just to get around the quota.

Important

We don’t guarantee backups for this storage! You need to keep backups of important files yourself!

If you want this storage to be mounted under /project, you can specify:

    "storage": [
        {
            "hostPath": "/project_ghent",
            "containerPath": "/project"
        }
    ],

tmpfs

This storage is:

- Not Persistent: The data gets lost when your job stops

- Not Shared: You can only access this storage from a single job

- Project Specific: Each project has its own separate version of this storage.

- Project Private: You can only access the project storage of your own project.

- “Small”

- Extremely Fast

On all clusters, you can add temporary memory storage to GPULab jobs. This uses a fixed part of the CPU memory (of the node the job runs on) as a storage device.

The storage will be empty when the job starts, and is irrevocably lost when the job ends. So there is no persistent storage, and this storage cannot be shared between nodes. The main advantage of tmpfs storage is that it is very fast.

One typical use case is to copy a dataset that needs to be accessed very frequently to tmpfs at the start of the job.

This memory is used in addition to the memory you request for your job. A job with request.resources.cpuMemoryGb set to 6 and a tmpfs storage with sizeGb: 4 will use 10 GB of CPU memory. For bookkeeping, all of this memory is counted as memory used by your job, so 10 GB in the example.

To use tmpfs, you need to specify hostPath, containerPath and sizeGb.

hostPath needs to be "tmpfs". containerPath and sizeGb can be chosen freely.

    "storage": [
        {
            "hostPath": "tmpfs",
            "containerPath": "/my_tmp_data",
            "sizeGb": 4
        }
    ]
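The typical use case mentioned above, copying a dataset to tmpfs at the start of a job, could look like the sketch below. The helper name `stage_dataset` is hypothetical. It first checks that the dataset fits in the free space of the target, since a tmpfs volume has a fixed size.

```shell
#!/bin/sh
# stage_dataset SRC DST: copy directory SRC into DST, but only if DST has
# enough free space. Hypothetical helper, not part of GPULab.
stage_dataset() {
    src=$1
    dst=$2
    # Size of the source tree in KB.
    need_kb=$(du -sk "$src" | awk '{print $1}')
    # Free space at the destination in KB (POSIX output format, 4th column).
    free_kb=$(df -Pk "$dst" | awk 'NR==2 {print $4}')
    if [ "$need_kb" -gt "$free_kb" ]; then
        echo "not enough space in $dst: need ${need_kb} KB, have ${free_kb} KB" >&2
        return 1
    fi
    cp -R "$src" "$dst"/
}
```

With the storage definition above, a job command could start with `stage_dataset /project_ghent/dataset /my_tmp_data` and then read the data from /my_tmp_data/dataset. Both paths are only examples.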

Accessing the storages outside of GPULab

In this section, we discuss some options to access the storages from elsewhere.

Access over SFTP

GPULab supports SFTP on nearly all jobs. See the SFTP section of the GPULab CLI documentation for more information.

Syncing files with rsync

It is possible to use rsync to upload and/or download files to/from GPULab. It is particularly useful for syncing large datasets, as it has several mechanisms to speed this up.

rsync must be installed both on your own machine and in the GPULab job that you connect to:

# apt update; apt install -y rsync

To connect to a job, first retrieve the correct SSH-command to use via gpulab-cli ssh <job-id> --show:

    thijs@ibcn055:~$ gpulab-cli ssh fe8768f5-d270-4ab7-a416-afa28c86511c --show
    Short: ssh gpulab-fe8768f5
    Full: ssh -i /home/thijs/.ssh/gpulab_cert.pem -oPort=22 5MVJPCLV@n083-09.wall2.ilabt.iminds.be

You can now adapt this command to use with rsync. In the following example the contents of the local folder dataset are synchronized to the folder /project_ghent/dataset:

    thijs@ibcn055:~$ rsync -avz dataset/ gpulab-fe8768f5:/project_ghent/dataset
    sending incremental file list
    created directory /project_ghent/dataset
    ./
    abc
    def
    sent 183 bytes received 96 bytes 50.73 bytes/sec
    total size is 8 speedup is 0.03
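Downloading works the same way with source and destination swapped. The sketch below wraps this in a small function. The name `pull_from_job` is hypothetical, and the `--partial` flag is an optional addition that keeps partially transferred files, so an interrupted transfer of a large file does not have to start from scratch.

```shell
#!/bin/sh
# pull_from_job HOST REMOTE_DIR LOCAL_DIR
# Copy the contents of REMOTE_DIR on HOST into LOCAL_DIR.
# HOST is the short SSH name shown by "gpulab-cli ssh <job-id> --show".
pull_from_job() {
    # The trailing slashes make rsync copy the contents of the directory
    # rather than the directory itself.
    rsync -avz --partial "${1}:${2}/" "${3}/"
}
```

For the job in the example above, `pull_from_job gpulab-fe8768f5 /project_ghent/dataset dataset` would fetch the remote dataset folder into the local folder dataset.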

Access from JupyterHub

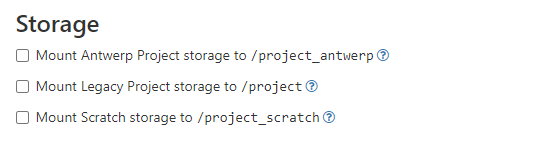

The iLab.t JupyterHub allows you to select which storage you want to mount. JupyterHub will show (one of) the selected storage(s) as the default folder, to prevent accidental data loss.

When using a terminal in JupyterHub, remember to switch to the correct folder to retrieve your files.

cd /project_scratch

Note

In some cases, you might get Invalid response: 403 Forbidden when you try to access your files.

This is because the permissions on /project_ghent have been changed and are too restrictive.

This is typically done by the virtual wall, and might be triggered by other users in your project.

To fix this, open a terminal in JupyterHub, and type:

sudo chmod uog+rwx /project_ghent

# The following section is commented out as it is not in production yet:

When you start an experiment with new virtual wall 2 (wall2.ilabt.imec.be) resources in the experiment MyProject, you can find the shared /project_ghent storage on all your nodes in this directory: /groups/ilabt-imec-be/MyProject/

Use the jFed experimenter GUI to reserve a resource, and access the data from that resource. You can find a detailed tutorial on how to do this in the Fed4Fire first experiment tutorial. Note that jFed has basic scp functionality, to make transferring files easier.

New object storage with S3 API (beta)

As a beta feature, we have launched a new object storage with the standard S3 API. This storage currently has no backup and is experimental (it does not have many users yet). If you want to try it out, see the S3 storage documentation. You can use the same account that you use for GPULab. We are also interested in your feedback, which you can send to gpulab@ilabt.imec.be.