Network

All nodes on the Virtual Wall are connected to the Control Network, which can be used to access the nodes over SSH or RDP. The Control Network is not suitable for experiments that require stable and known link performance. For such experiments, the Virtual Wall can dynamically provision VLANs on dedicated network interfaces. This gives you a separate network environment, on which you can also create and change impairment settings such as throughput, latency and packet loss.

By default, each node receives a public IPv6 address but only a private IPv4 address on the control network. The new Virtual Walls have NAT enabled; on the old Virtual Wall 1 and 2, you need to enable NAT yourself to get IPv4 access to the public internet. If you need to reach your nodes from the public internet over IPv4, you can also request a public IPv4 address (either for a XEN VM or for a bare metal server). As the number of public IPv4 addresses is limited, please use as few addresses as possible, for example by using port forwarding to host multiple services on the same IP address.

Accessing the nodes

Access through SSH

The nodes of the Virtual Wall and w-iLab.t are natively and publicly accessible over SSH via IPv6 (using the SSH keys installed by the Aggregate Manager). The RSpec manifests return DNS names, and these resolve to IPv6 addresses. This is true for both physical hosts and XEN VMs.

If you don’t have a native IPv6 address, you can use the built-in SSH proxy of jFed. jFed then transparently logs in through an IPv4 SSH proxy when opening SSH sessions.

To enable it, go to the jFed preferences, click Run Proxy Test at the bottom right, select Always next to Proxy for SSH connections, and click Save.

Note

This SSH-proxy only works out of the box for accounts on the imec Authority, as we need access to your public SSH key.
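Outside jFed, OpenSSH's `-J` (ProxyJump) option can give a comparable effect, assuming you have an account on some host that is reachable over IPv4 and itself has IPv6 connectivity. The sketch below is only an illustration: `jump.example.org`, the `ssh_via_jump` helper and the user name are hypothetical placeholders, not testbed services. Authentication to the node still happens from your own machine, so the key installed by the Aggregate Manager must be available locally (e.g. loaded in ssh-agent).

```shell
#!/bin/sh
# Hypothetical sketch: log in on an IPv6-only node through an IPv4-reachable
# jump host with OpenSSH's -J (ProxyJump) option.
# JUMP_HOST is a placeholder (assumption), not a testbed service.
JUMP_HOST="${JUMP_HOST:-jump.example.org}"

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# ssh_via_jump USER NODE: the jump host opens the TCP connection to NODE;
# authentication to NODE is still done by your local ssh client.
ssh_via_jump() {
  run ssh -J "$JUMP_HOST" "$1@$2"
}

# Example (placeholder user name):
# ssh_via_jump alice n091-07.wall2.ilabt.iminds.be
```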

I want public internet access on my nodes

What do you really want?

- IPv6 internet access is always available, both from and to the node.

- If you need to access the IPv4 internet from the node: this is enabled on the new Virtual Walls. On the old ones, you can enable NAT as described in Getting NATted IPv4 access to the internet on the old Virtual Wall 1 or on the old Virtual Wall 2.

- If you want to reach your node from the IPv4 internet, you need to give the node a public IPv4 address. See Requesting a public IPv4 address for a XEN VM (or set Routable control IP in jFed), or Requesting and configuring public IPv4 addresses on Bare Metal Servers if you need a public IPv4 address for a raw-pc (bare metal PC) or multiple IPs, e.g. for creating your own cloud.

- If you only need to open up a couple of ports to the internet, port forwarding is an alternative.

Getting NATted IPv4 access to the internet on w-iLab.t

For w-iLab.t, this is enabled by default.

Getting NATted IPv4 access to the internet on the old Virtual Wall 1

Quick method (same for vwall1 and vwall2, physical and virtual machines)

wget -O - -nv https://www.wall2.ilabt.iminds.be/enable-nat.sh | sudo bash

If you encounter problems with SSL, try:

wget -O - -nv --ciphers DEFAULT@SECLEVEL=1 https://www.wall2.ilabt.iminds.be/enable-nat.sh | sudo bash

Manual method:

For physical machines on the Virtual Wall 1, use the following route changes:

sudo route del default gw 10.2.15.254 ; sudo route add default gw 10.2.15.253

sudo route add -net 10.11.0.0 netmask 255.255.0.0 gw 10.2.15.254

sudo route add -net 10.2.32.0 netmask 255.255.240.0 gw 10.2.15.254
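On recent distributions the net-tools `route` command may not be installed. Below is a minimal sketch of the same Virtual Wall 1 route changes for physical machines using the iproute2 `ip` command; the `nat_routes_wall1_pc` and `run` helpers are illustrative, and setting `DRY_RUN=1` only prints the commands so you can review them first. Run it as root.

```shell
#!/bin/sh
# Sketch: iproute2 version of the Virtual Wall 1 (physical machine) route changes.
# Run as root; DRY_RUN=1 only prints the commands.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

nat_routes_wall1_pc() {
  # Move the default route from 10.2.15.254 to the NAT gateway 10.2.15.253.
  run ip route del default via 10.2.15.254
  run ip route add default via 10.2.15.253
  # Keep 10.11.0.0/16 (255.255.0.0) and 10.2.32.0/20 (255.255.240.0) on the original gateway.
  run ip route add 10.11.0.0/16 via 10.2.15.254
  run ip route add 10.2.32.0/20 via 10.2.15.254
}

# Review first, then apply:
# DRY_RUN=1 nat_routes_wall1_pc
# nat_routes_wall1_pc
```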

If you are connected through the iGent VPN, you can also add these routes in order to enable direct access (ssh, scp):

sudo route add -net 157.193.214.0 netmask 255.255.255.0 gw 10.2.15.254

sudo route add -net 157.193.215.0 netmask 255.255.255.0 gw 10.2.15.254

sudo route add -net 157.193.135.0 netmask 255.255.255.0 gw 10.2.15.254

sudo route add -net 192.168.126.0 netmask 255.255.255.0 gw 10.2.15.254

sudo route add -net 192.168.124.0 netmask 255.255.255.0 gw 10.2.15.254
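The five VPN routes differ only in their destination network (each 255.255.255.0 netmask is a /24), so they can also be added in a loop with iproute2. This is a sketch; `vpn_routes` is a hypothetical helper that takes the gateway as a parameter, because the gateway differs per wall and node type in this section. Run it as root; `DRY_RUN=1` only prints the commands.

```shell
#!/bin/sh
# Sketch: add the iGent VPN routes with iproute2. Run as root; DRY_RUN=1 only prints.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# vpn_routes GATEWAY: route each VPN-side /24 network through GATEWAY.
vpn_routes() {
  gw="$1"
  for net in 157.193.214.0 157.193.215.0 157.193.135.0 192.168.126.0 192.168.124.0; do
    run ip route add "$net/24" via "$gw"
  done
}

# Virtual Wall 1, physical machine:
# vpn_routes 10.2.15.254
```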

For VMs on the Virtual Wall 1, use the following route changes:

sudo route add -net 10.2.0.0 netmask 255.255.240.0 gw 172.16.0.1

sudo route del default gw 172.16.0.1 ; sudo route add default gw 172.16.0.2

If you are connected through the iGent VPN, you can also add these routes in order to enable direct access (ssh, scp):

sudo route add -net 157.193.214.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 157.193.215.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 157.193.135.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 192.168.126.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 192.168.124.0 netmask 255.255.255.0 gw 172.16.0.1
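For VMs, the main route changes can be sketched with iproute2 as follows (illustrative helper; run as root, `DRY_RUN=1` only prints). The order of the commands above is kept: the 10.2.0.0/20 route is added before the default route is moved to the NAT gateway.

```shell
#!/bin/sh
# Sketch: iproute2 version of the Virtual Wall 1 VM route changes.
# Run as root; DRY_RUN=1 only prints the commands.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

nat_routes_wall1_vm() {
  # Keep 10.2.0.0/20 (255.255.240.0) reachable via the VM's original gateway.
  run ip route add 10.2.0.0/20 via 172.16.0.1
  # Then move the default route to the NAT gateway 172.16.0.2.
  run ip route del default via 172.16.0.1
  run ip route add default via 172.16.0.2
}
```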

Note that these routes are NOT saved to any configuration file, so they are lost when the node reboots. The quick method mentioned above does store these changes in configuration files on Debian and Ubuntu.
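Because manual route changes are lost on reboot, it can help to check which IPv4 default gateway is currently active. Going by the gateways used above, 10.2.15.253 (physical machines) or 172.16.0.2 (VMs) would indicate that the NAT route is in place. The `default_gw` helper below is a sketch that reads the output of `ip -4 route show default`.

```shell
#!/bin/sh
# Sketch: print the gateway of the first IPv4 default route.
# Reads "ip -4 route show default" output on stdin.
default_gw() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Usage on a node:
# ip -4 route show default | default_gw
```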

Getting NATted IPv4 access to the internet on the old Virtual Wall 2

Quick method (same for vwall1 and vwall2, physical and virtual machines)

wget -O - -nv https://www.wall2.ilabt.iminds.be/enable-nat.sh | sudo bash

If you encounter problems with SSL, try:

wget -O - -nv --ciphers DEFAULT@SECLEVEL=1 https://www.wall2.ilabt.iminds.be/enable-nat.sh | sudo bash

Manual method:

For physical machines on the Virtual Wall 2, use the following route changes:

sudo route del default gw 10.2.47.254 ; sudo route add default gw 10.2.47.253

sudo route add -net 10.11.0.0 netmask 255.255.0.0 gw 10.2.47.254

sudo route add -net 10.2.0.0 netmask 255.255.240.0 gw 10.2.47.254
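For systems without the net-tools `route` command, here is an iproute2 sketch of the same Virtual Wall 2 changes for physical machines (illustrative helper; run as root, `DRY_RUN=1` only prints).

```shell
#!/bin/sh
# Sketch: iproute2 version of the Virtual Wall 2 (physical machine) route changes.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

nat_routes_wall2_pc() {
  # Move the default route from 10.2.47.254 to the NAT gateway 10.2.47.253.
  run ip route del default via 10.2.47.254
  run ip route add default via 10.2.47.253
  # Keep 10.11.0.0/16 and 10.2.0.0/20 on the original gateway.
  run ip route add 10.11.0.0/16 via 10.2.47.254
  run ip route add 10.2.0.0/20 via 10.2.47.254
}
```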

If you are connected through the iGent VPN, you can also add these routes in order to enable direct access (ssh, scp):

sudo route add -net 157.193.214.0 netmask 255.255.255.0 gw 10.2.47.254

sudo route add -net 157.193.215.0 netmask 255.255.255.0 gw 10.2.47.254

sudo route add -net 157.193.135.0 netmask 255.255.255.0 gw 10.2.47.254

sudo route add -net 192.168.126.0 netmask 255.255.255.0 gw 10.2.47.254

sudo route add -net 192.168.124.0 netmask 255.255.255.0 gw 10.2.47.254

For VMs on the Virtual Wall 2, use the following route changes:

sudo route add -net 10.2.32.0 netmask 255.255.240.0 gw 172.16.0.1

sudo route del default gw 172.16.0.1 ; sudo route add default gw 172.16.0.2
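The same VM route changes can be sketched with iproute2, keeping the order above: the 10.2.32.0/20 route first, then the default route (illustrative helper; run as root, `DRY_RUN=1` only prints).

```shell
#!/bin/sh
# Sketch: iproute2 version of the Virtual Wall 2 VM route changes.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

nat_routes_wall2_vm() {
  # Keep 10.2.32.0/20 reachable via the VM's original gateway.
  run ip route add 10.2.32.0/20 via 172.16.0.1
  # Then move the default route to the NAT gateway 172.16.0.2.
  run ip route del default via 172.16.0.1
  run ip route add default via 172.16.0.2
}
```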

If you are connected through the iGent VPN, you can also add these routes in order to enable direct access (ssh, scp):

sudo route add -net 157.193.214.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 157.193.215.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 157.193.135.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 192.168.126.0 netmask 255.255.255.0 gw 172.16.0.1

sudo route add -net 192.168.124.0 netmask 255.255.255.0 gw 172.16.0.1

Note that these routes are NOT saved to any configuration file, so they are lost when the node reboots. The quick method mentioned above does store these changes in configuration files on Debian and Ubuntu.

Requesting a public IPv4 address for a XEN VM

All VMs on the Virtual Wall have a public IPv6 address and a private IPv4 address. However, you can also request a public IPv4 address, either by:

- checking the Routable Control IP checkbox in the Node Properties dialog after you have dragged the XEN VM into jFed, or

- editing the RSpec directly as follows (see routable_control_ip):

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<rspec type="request"
       xsi:schemaLocation="http://www.geni.net/resources/rspec/3 http://www.geni.net/resources/rspec/3/request.xsd"
       xmlns="http://www.geni.net/resources/rspec/3" xmlns:jFed="http://jfed.iminds.be/rspec/ext/jfed/1"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:emulab="http://www.protogeni.net/resources/rspec/ext/emulab/1">
  <node client_id="node0" exclusive="false"
        component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm">
    <sliver_type name="emulab-xen"/>
    <emulab:routable_control_ip />
  </node>
</rspec>
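Once the VM is running, you can check from inside it whether a public IPv4 address was assigned. The `public_ipv4` helper below is a sketch, assuming the address shows up on one of the VM's interfaces; it filters the output of `ip -4 -o addr show` and drops loopback, link-local and private (RFC 1918) addresses.

```shell
#!/bin/sh
# Sketch: print the IPv4 addresses that are not loopback, link-local or private.
# Reads "ip -4 -o addr show" output on stdin (field 3 is "inet", field 4 is addr/prefix).
public_ipv4() {
  awk '$3 == "inet" {
    split($4, a, "/"); ip = a[1]; split(ip, o, ".")
    if (o[1] == 10 || o[1] == 127) next
    if (o[1] == 169 && o[2] == 254) next
    if (o[1] == 172 && o[2] >= 16 && o[2] <= 31) next
    if (o[1] == 192 && o[2] == 168) next
    print ip
  }'
}

# Usage on the VM:
# ip -4 -o addr show | public_ipv4
```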

Requesting and configuring public IPv4 addresses on Bare Metal Servers

All Virtual Wall machines have a public IPv6 address and a private IPv4 address. As shown above, you can request a XEN VM with a public IPv4 control interface. However, you can also request IPv4 addresses as a separate resource. You can then use such an address, e.g., to make a bare metal server (raw-pc) publicly reachable over IPv4, or to build your own cloud with a number of public IPv4 addresses. After requesting the IPv4 address, you need to configure it manually on one of your bare metal servers.

Requesting a public IPv4-address

Requesting a public IPv4-address via jFed

- Drag an Address Pool into your experiment design.

- Double-click the new node and choose the testbed in which you want to request the public IP addresses, and the number of IP addresses that you need.

Requesting a public IPv4-address by editing the RSpec

To request one address, use the following (note that the routable_pool tag is NOT inside a node tag; it is a separate resource):

<?xml version='1.0'?>
<rspec type="request"
       xsi:schemaLocation="http://www.geni.net/resources/rspec/3 http://www.geni.net/resources/rspec/3/request.xsd"
       xmlns="http://www.geni.net/resources/rspec/3" xmlns:jFed="http://jfed.iminds.be/rspec/ext/jfed/1"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:emulab="http://www.protogeni.net/resources/rspec/ext/emulab/1">
  <node client_id="node0" exclusive="true" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm">
    <sliver_type name="raw-pc"/>
  </node>
  <emulab:routable_pool client_id="foo" count="1"
                        component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm" type="any"/>
</rspec>

If the request is successful, the manifest will contain:

<emulab:routable_pool client_id="foo"
                      component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm"
                      count="1" type="any">
  <emulab:ipv4 address="193.190.127.234" netmask="255.255.255.192"/>
</emulab:routable_pool>

so you know the IP address that has been assigned.
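If you saved the manifest to a file (the name `manifest.xml` below is just an example), a quick text-based sketch can extract the assigned address(es). It assumes the `emulab:` namespace prefix shown above and one element per line or less; an XML-aware tool would be more robust. `pool_addresses` is an illustrative helper.

```shell
#!/bin/sh
# Sketch: print every address="..." of the emulab:ipv4 elements in a manifest file.
# Plain grep/sed; assumes the "emulab:" prefix used in the manifest above.
pool_addresses() {
  grep -o '<emulab:ipv4 [^>]*address="[^"]*"' "$1" | sed 's/.*address="\([^"]*\)"/\1/'
}

# Usage:
# pool_addresses manifest.xml
```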

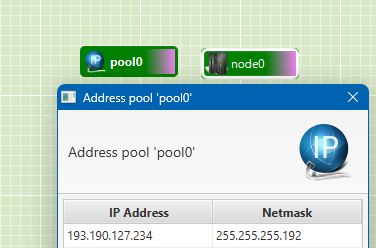

You can retrieve it in jFed by double clicking on the address pool:

Configuring a public IPv4-address on a Bare Metal Server

To assign the IP address to the node, execute the following steps as root:

Quick Method (note: the manual method is more stable):

wget -O - -q https://www.wall2.ilabt.iminds.be/enable-public-ipv4.sh | sudo bash

The script automatically detects the address in the address pool if the pool is in the same experiment. If it is not, or if the pool contains more than one address, pass the chosen IP to the script:

wget -q https://www.wall2.ilabt.iminds.be/enable-public-ipv4.sh

chmod u+x enable-public-ipv4.sh

sudo ./enable-public-ipv4.sh 193.190.xxx.yyy

If you encounter problems with SSL, try:

wget -O - -nv --ciphers DEFAULT@SECLEVEL=1 https://www.wall2.ilabt.iminds.be/enable-public-ipv4.sh | sudo bash

Manual Method:

You will need the following information:

- the-ip-from-manifest: see the previous section on how to request a public IP address and retrieve it

- interface name: use ip a to find the interface to which the control network is connected. The commands below assume it is eth0.

For Wall 1:

sudo su

modprobe 8021q

vconfig add eth0 28 # note: eth0 is assumed to be the control interface, this can be another name as well.

ifconfig eth0.28 the-ip-from-manifest netmask 255.255.255.192

route del default ; route add default gw 193.190.127.129

For Wall 2:

sudo su

modprobe 8021q

vconfig add eth0 29 # note: eth0 is assumed to be the control interface, this can be another name as well.

ifconfig eth0.29 the-ip-from-manifest netmask 255.255.255.192

route del default ; route add default gw 193.190.127.193
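`vconfig` and `ifconfig` may be missing on recent distributions. The sketch below performs the same steps with iproute2 for either wall; `configure_public_ipv4` is an illustrative helper, 255.255.255.192 is written as /26, and `DRY_RUN=1` only prints the commands. Run it as root. Like the commands above, it removes the existing default route, so an IPv4 session via the private address will drop.

```shell
#!/bin/sh
# Sketch: iproute2 version of the manual public IPv4 steps (run as root).
# Arguments: wall number (1 or 2), control interface, public IP from the manifest.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

configure_public_ipv4() {
  wall="$1"; ctl="$2"; ip4="$3"
  case "$wall" in
    1) vlan=28; gw=193.190.127.129 ;;
    2) vlan=29; gw=193.190.127.193 ;;
    *) echo "unknown wall: $wall" >&2; return 1 ;;
  esac
  run modprobe 8021q
  # Tagged sub-interface on the control interface (replaces vconfig).
  run ip link add link "$ctl" name "$ctl.$vlan" type vlan id "$vlan"
  # 255.255.255.192 is a /26; ifconfig also brought the interface up.
  run ip addr add "$ip4/26" dev "$ctl.$vlan"
  run ip link set dev "$ctl.$vlan" up
  # Swap the default route for the public gateway.
  run ip route del default
  run ip route add default via "$gw" dev "$ctl.$vlan"
}

# Example (Virtual Wall 2, control interface eth0):
# DRY_RUN=1 configure_public_ipv4 2 eth0 193.190.127.234
```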

If you are connected to the server over IPv4, these commands will break your connection to the node, because the default route via the private IPv4 address is removed. You must then reconnect to the server using the public IPv4 address. To avoid this interruption, we recommend connecting to the node over IPv6 (you can use the built-in jFed proxy for this).

Tip: Permanently configuring a Public IPv4-address

On Debian, and on Ubuntu 18.04 or earlier, you can make this configuration survive a reboot by first executing:

echo 8021q | sudo tee -a /etc/modules

and then adding a section at the bottom of /etc/network/interfaces. Make sure to replace eth0 with the correct name of the control interface.

For wall1:

auto eth0.28
iface eth0.28 inet static
    address <the-ip-from-manifest>
    netmask 255.255.255.192
    gateway 193.190.127.129
    vlan-raw-device eth0
    pre-up route del default gw 10.2.15.254
    post-down route add default gw 10.2.15.254

For wall2:

auto eth0.29
iface eth0.29 inet static
    address <the-ip-from-manifest>
    netmask 255.255.255.192
    gateway 193.190.127.193
    vlan-raw-device eth0
    pre-up route del default gw 10.2.47.254
    post-down route add default gw 10.2.47.254
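To avoid typos when editing /etc/network/interfaces, the stanza can be generated from the wall number, control interface and public IP. This is a sketch: `interfaces_stanza` is an illustrative helper that prints the stanzas shown above (including their net-tools `route` hooks), so you can review the output before appending it.

```shell
#!/bin/sh
# Sketch: print the /etc/network/interfaces stanza for a public IPv4 address.
# Arguments: wall number (1 or 2), control interface, public IP from the manifest.
interfaces_stanza() {
  wall="$1"; ctl="$2"; ip4="$3"
  case "$wall" in
    1) vlan=28; gw=193.190.127.129; ctlgw=10.2.15.254 ;;
    2) vlan=29; gw=193.190.127.193; ctlgw=10.2.47.254 ;;
    *) echo "unknown wall: $wall" >&2; return 1 ;;
  esac
  cat <<EOF
auto $ctl.$vlan
iface $ctl.$vlan inet static
    address $ip4
    netmask 255.255.255.192
    gateway $gw
    vlan-raw-device $ctl
    pre-up route del default gw $ctlgw
    post-down route add default gw $ctlgw
EOF
}

# Review the output first, then append it:
# interfaces_stanza 2 eth0 193.190.127.234 | sudo tee -a /etc/network/interfaces
```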

Port forwarding with ssh tunnel

By default, all nodes have a public IPv6 address and a private IPv4 address. If you don’t have IPv6, jFed works around this by using a proxy for SSH login. However, if you want to access other services, e.g. the web interfaces of your software, you can use the following method.

On Virtual Wall 1 and 2, you can use XEN VMs with a public IPv4 address (right-click the node in jFed and select Routable control IP). These XEN VMs can be accessed freely over IPv4, so you can use them to forward particular services from IPv6 to IPv4.

Suppose a web server listening on port 80 runs on a testbed machine that is reachable over IPv6 only, e.g. n091-07.wall2.ilabt.iminds.be.

You also have a XEN VM with a public IPv4 address (in the same experiment or in another one), e.g. 193.190.127.234.

Log in on the XEN VM, and do the following:

sudo apt-get update

sudo apt-get install screen

screen   (keeps the session running if your terminal closes; press Ctrl-A, then D, to detach while keeping it running)

ssh -L 8080:n091-07.wall2.ilabt.iminds.be:80 -g localhost

You can now browse to http://193.190.127.234:8080 and see what is served on port 80 of n091-07.wall2.ilabt.iminds.be.
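Instead of keeping the tunnel in a screen session, OpenSSH can put it in the background itself: `-f` backgrounds ssh after authentication, `-N` runs no remote command, and `-o ExitOnForwardFailure=yes` makes ssh exit if the port cannot be forwarded. Below is a sketch with an illustrative `forward_port` helper; like the command above, it logs in to localhost on the XEN VM.

```shell
#!/bin/sh
# Sketch: background ssh tunnel from a public port on this VM to a target host/port.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# forward_port LOCAL_PORT TARGET_HOST TARGET_PORT
# -g lets other hosts connect to LOCAL_PORT, as in the command above.
forward_port() {
  run ssh -f -N -g -o ExitOnForwardFailure=yes -L "$1:$2:$3" localhost
}

# Example:
# forward_port 8080 n091-07.wall2.ilabt.iminds.be 80
```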

Port forwarding with iptables

The situation is the same as above: you have a Virtual Wall node with public IPv4 address 193.190.127.234 and want to forward port 8080 on it to port 80 of n091-07:

echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward

sudo iptables -t nat -A PREROUTING -i eno1.29 -p tcp --dport 8080 -j DNAT --to-destination n091-07.wall2.ilabt.iminds.be:80

sudo iptables -t nat -A POSTROUTING -d n091-07.wall2.ilabt.iminds.be -j SNAT --to 193.190.127.234

The POSTROUTING rule is not always needed; this depends on the routing on n091-07 and on your Virtual Wall node.

You can now browse to http://193.190.127.234:8080 and see what is served on port 80 of n091-07.wall2.ilabt.iminds.be.
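The same rules can be written as a parameterized sketch. Here `sysctl -w net.ipv4.ip_forward=1` enables forwarding (equivalent to writing 1 to /proc/sys/net/ipv4/ip_forward), and the target is given as an IPv4 address, assuming it has one that is reachable from the forwarding node (for example its private control network address). `dnat_forward` and the example address 10.2.32.7 are hypothetical. Depending on the node's firewall configuration, a FORWARD rule may also be needed.

```shell
#!/bin/sh
# Sketch: DNAT a public TCP port to a target IPv4 address/port (run as root).
# Arguments: public interface, public IP, public port, target IP, target port.

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

dnat_forward() {
  pub_if="$1"; pub_ip="$2"; pub_port="$3"; target_ip="$4"; target_port="$5"
  # Enable IPv4 forwarding in the kernel (not persistent across reboots).
  run sysctl -w net.ipv4.ip_forward=1
  # Rewrite the destination of connections arriving on the public port.
  run iptables -t nat -A PREROUTING -i "$pub_if" -p tcp --dport "$pub_port" \
    -j DNAT --to-destination "$target_ip:$target_port"
  # Rewrite the source so that replies return through this node.
  run iptables -t nat -A POSTROUTING -d "$target_ip" -j SNAT --to-source "$pub_ip"
}

# Example with a hypothetical target address:
# DRY_RUN=1 dnat_forward eno1.29 193.190.127.234 8080 10.2.32.7 80
```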

Sharing a LAN between experiments

A LAN can be shared between different experiments.

Behind the scenes, this is implemented by assigning the same VLAN to links in the different experiments. From the perspective of the experiments, this detail is hidden, and it just looks like all nodes on the link are connected to the same switch.

Note that this is a layer 2 link, so the IP addresses of the nodes connected to the shared link in all participating experiments must be in the same subnet and must all be unique within that subnet.
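Before starting a second experiment, you can check that its node addresses fall in the shared subnet. The `same_subnet` helper below is a sketch that compares the network parts of two IPv4 addresses under a given netmask; it does not validate its input, and it cannot check uniqueness, which remains up to you.

```shell
#!/bin/sh
# Sketch: test whether two IPv4 addresses lie in the same subnet.

# ip_to_int A.B.C.D: print the address as a 32-bit integer (no input validation).
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet IP1 IP2 NETMASK: succeed if both addresses share the network part.
same_subnet() {
  a=$(ip_to_int "$1"); b=$(ip_to_int "$2"); m=$(ip_to_int "$3")
  [ $(( a & m )) -eq $(( b & m )) ]
}

# Example:
# same_subnet 192.168.0.1 192.168.0.20 255.255.255.0 && echo "same subnet"
```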

To use this feature, first a LAN in an existing experiment must be shared. Then, additional experiments can be started with a connection to this LAN.

Step by step instructions:

1. Create an experiment (e.g. 2 nodes) with a LAN that you want to share, and run it. Once it is running (in jFed) and completely ready, right-click the LAN you want to share and select “Share/Unshare LAN”. In the dialog, give the LAN a unique name. Anyone who knows this name will be able to connect links to the LAN in the second step.

2. Create an experiment (but do not run it yet) that includes a LAN that should be connected to the shared LAN created in step 1. This experiment has to be on the same testbed (so you cannot use this feature to share LANs between Virtual Wall 2 and Virtual Wall 1). Make sure that the IP addresses of the nodes connected to this LAN are in the same subnet that is used on the shared LAN. While designing this experiment, right-click the LAN, go to configure link, Link type, then check ‘Shared Lan’ and type in the same name you gave in step 1.

In the RSpec, the following link_shared_vlan tag will be added:

<link client_id="link2">
  <component_manager name="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm"/>
  <link_type name="lan"/>
  <sharedvlan:link_shared_vlan name="shared_lan_mylanname"/>
</link>

Run the experiment, and the LANs will be connected. This second step can be repeated with other experiments if needed.

Note that when the first experiment is terminated, or the “Unshare LAN” feature is used in the first experiment, all experiments using the shared LAN will be disconnected from it.

Openflow

Some interfaces of some Virtual Wall nodes are not connected to the big VLAN switch, but to a PICA8 P-3290 Openflow switch. This switch is connected to a FlowVisor, which is programmed by a Foam Aggregate Manager.

The nice thing about the Virtual Wall is that you can combine topologies on the Virtual Wall itself (with Open vSwitch) with this PICA8 switch.

Port connections

The following nodes are connected to the Openflow switch (node name, port on the switch):

n063-13a   1
n063-13b   2
n063-14a   3
n063-14b   4
n063-16b   8
n063-17a   9
n063-17b  10
n063-18a  11
n063-18b  12
n063-19a  13
n063-19b  14
n063-20a  15
n063-20b  16
n063-21a  17
n063-21b  18
n061-13a  48
n061-13b  47
n061-14a  46
n061-14b  45
n061-15a  44
n061-15b  43
n061-16a  42
n061-16b  41
n061-17a  40
n061-17b  39
n061-18a  38
n061-18b  37
n061-19a  36
n061-19b  35
n061-20a  34
n061-20b  33
n061-21a  32
n061-21b  31

Foam

Foam can be accessed at https://foam.atlantis.ugent.be:3626/foam/gapi/2

Openflow Request RSpec

jFed can load these RSpecs, as shown in jFed openflow support. An example RSpec follows (you can run your Openflow controllers on Virtual Wall nodes and then specify their IP addresses in this RSpec):

<?xml version='1.0'?>
<rspec xmlns="http://www.geni.net/resources/rspec/3" type="request" generated_by="jFed RSpec Editor" generated="2016-07-13T05:36:43.775+02:00" xmlns:emulab="http://www.protogeni.net/resources/rspec/ext/emulab/1" xmlns:jfedBonfire="http://jfed.iminds.be/rspec/ext/jfed-bonfire/1" xmlns:delay="http://www.protogeni.net/resources/rspec/ext/delay/1" xmlns:jfed-command="http://jfed.iminds.be/rspec/ext/jfed-command/1" xmlns:client="http://www.protogeni.net/resources/rspec/ext/client/1" xmlns:jfed-ssh-keys="http://jfed.iminds.be/rspec/ext/jfed-ssh-keys/1" xmlns:jfed="http://jfed.iminds.be/rspec/ext/jfed/1" xmlns:xs="http://www.w3.org/2001/XMLSchema-instance" xmlns:openflow="http://www.geni.net/resources/rspec/ext/openflow/3" xmlns:sharedvlan="http://www.protogeni.net/resources/rspec/ext/shared-vlan/1" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.geni.net/resources/rspec/3 http://www.geni.net/resources/rspec/3/request.xsd http://www.geni.net/resources/rspec/ext/openflow/3 http://www.geni.net/resources/rspec/ext/openflow/3/of-resv.xsd">
  <openflow:sliver description="bvermeul's experiment." email="brecht.vermeulen@ugent.be" ref="http://jfed.iminds.be">
    <openflow:controller url="tcp:10.2.0.127:6633" type="primary"/>
    <openflow:group name="johngrp">
      <openflow:datapath component_id="urn:publicid:IDN+openflow:foam:foam.atlantis.ugent.be+datapath+5e:3e:08:9e:01:61:64:cc" component_manager_id="urn:publicid:IDN+openflow:foam:foam.atlantis.ugent.be+authority+am">
        <openflow:port num="1"/>
        <openflow:port num="2"/>
        <openflow:port num="3"/>
      </openflow:datapath>
    </openflow:group>
    <openflow:match>
      <openflow:use-group name="johngrp"/>
      <openflow:packet>
        <openflow:dl_type value="0x800,0x806"/>
        <openflow:nw_src value="192.168.3.0/24" />
        <openflow:nw_dst value="192.168.3.0/24" />
      </openflow:packet>
    </openflow:match>
  </openflow:sliver>
</rspec>

Link impairment

The Virtual Wall testbeds support the automated setup of impairment parameters on links, with which you can influence the behaviour of a link in the following ways:

- a fixed delay

- a fixed bandwidth limitation

- random packet loss

This impairment is useful in many scenarios. However, if your experiment requires exact control over the impairment, you’re better off setting it up manually.

Automated impairment: Two nodes with an impaired link in between

Under the hood, this method will configure tc on the Linux nodes at each end of the link.

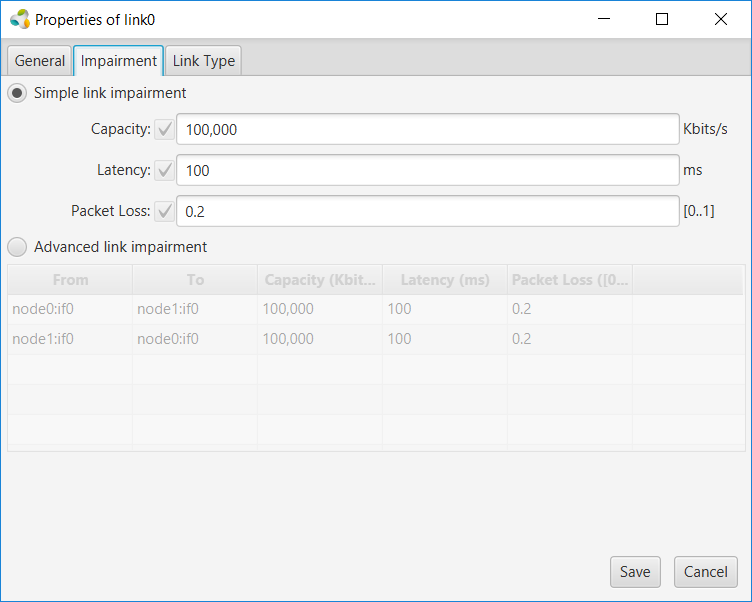

After drawing a link between two nodes in jFed, you can add this impairment by double-clicking on the link, and changing the values in the ‘Link Impairment’-tab:

This results in an RSpec with link-impairment configured in the <link>-tag. An example:

<?xml version='1.0'?>
<rspec xmlns="http://www.geni.net/resources/rspec/3" type="request" generated_by="jFed RSpec Editor" generated="2018-12-12T16:03:17.694+01:00" xmlns:emulab="http://www.protogeni.net/resources/rspec/ext/emulab/1" xmlns:delay="http://www.protogeni.net/resources/rspec/ext/delay/1" xmlns:jfed-command="http://jfed.iminds.be/rspec/ext/jfed-command/1" xmlns:client="http://www.protogeni.net/resources/rspec/ext/client/1" xmlns:jfed-ssh-keys="http://jfed.iminds.be/rspec/ext/jfed-ssh-keys/1" xmlns:jfed="http://jfed.iminds.be/rspec/ext/jfed/1" xmlns:sharedvlan="http://www.protogeni.net/resources/rspec/ext/shared-vlan/1" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.geni.net/resources/rspec/3 http://www.geni.net/resources/rspec/3/request.xsd ">
  <node client_id="node0" exclusive="true" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm">
    <sliver_type name="raw-pc"/>
    <location xmlns="http://jfed.iminds.be/rspec/ext/jfed/1" x="340.8" y="104.0"/>
    <interface client_id="node0:if0">
      <ip address="192.168.0.1" netmask="255.255.255.0" type="ipv4"/>
    </interface>
  </node>
  <node client_id="node1" exclusive="true" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm">
    <sliver_type name="raw-pc"/>
    <location xmlns="http://jfed.iminds.be/rspec/ext/jfed/1" x="343.2" y="278.4"/>
    <interface client_id="node1:if0">
      <ip address="192.168.0.2" netmask="255.255.255.0" type="ipv4"/>
    </interface>
  </node>
  <link client_id="link0">
    <component_manager name="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm"/>
    <interface_ref client_id="node0:if0"/>
    <interface_ref client_id="node1:if0"/>
    <link_type name="lan"/>
    <property source_id="node0:if0" dest_id="node1:if0" capacity="100000" latency="100" packet_loss="0.2"/>
    <property source_id="node1:if0" dest_id="node0:if0" capacity="100000" latency="100" packet_loss="0.2"/>
  </link>
</rspec>

Changing the impairment

You can change this impairment by running tc commands on each involved node.

After starting the experiment with impairment, you can check the current impairment by running tc qdisc show:

qdisc htb 130: dev eth5 root refcnt 9 r2q 10 default 1 direct_packets_stat 0 direct_qlen 1000

qdisc netem 120: dev eth5 parent 130:1 limit 1000 delay 200.0ms

This shows the current latency and packet loss (netem qdisc); the bandwidth limit is configured on the htb class, which you can show with tc class show dev eth5. The commands that the testbed uses can be found on the node in /var/emulab/boot/rc.linkdelay. The configuration above was installed by issuing:

/sbin/tc qdisc del dev eth5 root

/sbin/tc qdisc del dev eth5 ingress

/sbin/tc qdisc add dev eth5 handle 130 root htb default 1

/sbin/tc class add dev eth5 classid 130:1 parent 130 htb rate 10000000 ceil 10000000

/sbin/tc qdisc add dev eth5 handle 120 parent 130:1 netem drop 0 delay 200000us

This installs a 10Mb/s bandwidth limit and 200ms one-way latency (0% packet loss). The other node can have a similar or different delay.
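To set your own values, the same sequence can be wrapped in a small function. This is a sketch: `set_impairment` is an illustrative helper, the handles 130: and 120: are copied from the commands above, and rate, delay and loss use tc's unit syntax (e.g. `10mbit`, `200ms`, `1%`). Run it as root on each node whose outgoing traffic you want to impair; `DRY_RUN=1` only prints the commands.

```shell
#!/bin/sh
# Sketch: reinstall an htb rate limit with a netem delay/loss qdisc below it.
# Arguments: interface, rate (e.g. 10mbit), one-way delay (e.g. 200ms), loss (e.g. 0%).

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

set_impairment() {
  dev="$1"; rate="$2"; delay="$3"; loss="$4"
  # Remove existing root/ingress qdiscs; errors are ignored when none are installed.
  run tc qdisc del dev "$dev" root 2>/dev/null || true
  run tc qdisc del dev "$dev" ingress 2>/dev/null || true
  # htb root qdisc with one default class that limits the rate.
  run tc qdisc add dev "$dev" handle 130: root htb default 1
  run tc class add dev "$dev" parent 130: classid 130:1 htb rate "$rate" ceil "$rate"
  # netem below the htb class adds the delay and random loss.
  run tc qdisc add dev "$dev" handle 120: parent 130:1 netem delay "$delay" loss "$loss"
}

# Same values as above (10 Mb/s, 200 ms, no loss):
# set_impairment eth5 10mbit 200ms 0%
```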

You can find more examples of using tc and netem at http://www.linuxfoundation.org/collaborate/workgroups/networking/netem.

Automatic impairment: Two nodes with an impairment bridge node in between (deprecated)

Warning

This method is no longer recommended due to limited compatibility with recent hardware. We might remove support for it completely in the future!

When you want to emulate the link between two nodes, you can put an extra bridge node in between, and define the packet loss, latency and bandwidth for each direction of the link. You have to specify IP addresses for the links.

Under the hood, this method adds an extra node between the 2 nodes, and configures it as an impairment bridge.

However, this bridge node uses ipfw on FreeBSD 8.2, an old FreeBSD version that no longer works on all Virtual Wall nodes.

This method has also always performed poorly at higher bandwidths.

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<rspec generated="2014-02-01T16:47:22.736+01:00" generated_by="Experimental jFed Rspec Editor" type="request"
       xsi:schemaLocation="http://www.geni.net/resources/rspec/3 http://www.geni.net/resources/rspec/3/request.xsd http://www.protogeni.net/resources/rspec/ext/delay/1 http://www.protogeni.net/resources/rspec/ext/delay/1/request.xsd"
       xmlns="http://www.geni.net/resources/rspec/3" xmlns:delay="http://www.protogeni.net/resources/rspec/ext/delay/1"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
  <node client_id="node0" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm" exclusive="true">
    <sliver_type name="raw-pc"/>
    <interface client_id="node0:if0">
      <ip address="10.0.1.1" netmask="255.255.255.0" type="ipv4"/>
    </interface>
  </node>
  <node client_id="node1" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm" exclusive="true">
    <sliver_type name="raw-pc"/>
    <interface client_id="node1:if0">
      <ip address="10.0.1.2" netmask="255.255.255.0" type="ipv4"/>
    </interface>
  </node>
  <node client_id="bridge" component_manager_id="urn:publicid:IDN+wall2.ilabt.iminds.be+authority+cm"
        exclusive="true">
    <sliver_type name="delay">
      <delay:sliver_type_shaping xmlns="http://www.protogeni.net/resources/rspec/ext/delay/1">
        <pipe source="delay:left" dest="delay:right" capacity="1000" latency="50"/>
        <pipe source="delay:right" dest="delay:left" capacity="10000" latency="25" packet_loss="0.01"/>
      </delay:sliver_type_shaping>
    </sliver_type>
    <interface client_id="delay:left"/>
    <interface client_id="delay:right"/>
  </node>
  <link client_id="link1">
    <interface_ref client_id="node0:if0"/>
    <interface_ref client_id="delay:left"/>
    <property source_id="delay:left" dest_id="node0:if0"/>
    <property source_id="node0:if0" dest_id="delay:left"/>
    <link_type name="lan"/>
  </link>
  <link client_id="link2">
    <interface_ref client_id="node1:if0"/>
    <interface_ref client_id="delay:right"/>
    <property source_id="delay:right" dest_id="node1:if0"/>
    <property source_id="node1:if0" dest_id="delay:right"/>
    <link_type name="lan"/>
  </link>
</rspec>

Manual impairment

If you need full control of the impairment, you’re better off setting up the impairment manually.

Some methods you could use:

- Use tc (or any other software) to manually set up an impairment on each node.

- Add an extra node that you manually configure as an impairment bridge. You could, for example, use a Linux node with brctl and tc.

- Consider using Openflow to create the impairment bridge. You could use Mininet or another software Openflow solution, or you could use the Virtual Wall Openflow hardware.

- If you’re feeling brave, you could try to get the Click Modular Router working on the Virtual Wall. (This was our preferred solution in the days before the Virtual Wall, and it still seems to be around and actively developed again.)
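A minimal sketch of the bridge option, using the iproute2 `ip link ... type bridge` commands instead of `brctl`, plus netem for the delay. It assumes a Linux bridge node whose two experiment interfaces (called `eth1` and `eth2` here, an assumption) carry no IP addresses; the bridge name `br0` and the `make_impairment_bridge` helper are hypothetical. netem is attached as the root qdisc of each bridge port, so it acts on traffic leaving the bridge through that port, i.e. once per direction. Run it as root; `DRY_RUN=1` only prints the commands.

```shell
#!/bin/sh
# Sketch: Linux impairment bridge between two interfaces (run as root).
# Arguments: left interface, right interface, one-way delay (e.g. 50ms).

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

make_impairment_bridge() {
  left="$1"; right="$2"; delay="$3"
  # Create the bridge and attach both interfaces (like brctl addbr/addif).
  run ip link add name br0 type bridge
  run ip link set dev "$left" master br0
  run ip link set dev "$right" master br0
  run ip link set dev "$left" up
  run ip link set dev "$right" up
  run ip link set dev br0 up
  # Egress netem per port: leaving via $right = left-to-right, via $left = right-to-left.
  run tc qdisc replace dev "$right" root netem delay "$delay"
  run tc qdisc replace dev "$left" root netem delay "$delay"
}

# Example:
# DRY_RUN=1 make_impairment_bridge eth1 eth2 50ms
```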

Using 10 Gb/s links

Some nodes have 10 Gb/s interfaces and are connected to a 10 Gb/s capable switch. To use these 10 Gb/s links in jFed, you need to:

- Drag in 2 nodes of type “Physical node”, and double-click them to configure them:

  - Select testbed “imec Virtual Wall 2”

  - For “Node:”, select “Specific Node:”

  - In the dropdown below “Specific Node:”, choose a node with a name matching n065-xx (where xx is a number)

- Draw a link between these nodes

- Run the experiment
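Once the experiment is running, you can check the negotiated speed of the experiment interface on each node. Linux reports it in Mb/s in `/sys/class/net/<interface>/speed`, so a 10 Gb/s link should show 10000 (a link that is down may report an error or -1). The `link_speed` and `is_10g` helpers are a sketch; the optional `SYSFS_NET` variable, an assumption of this sketch, lets you point them at another directory.

```shell
#!/bin/sh
# Sketch: read the negotiated link speed (Mb/s) of an interface from sysfs.
# SYSFS_NET defaults to the real sysfs directory.
link_speed() {
  cat "${SYSFS_NET:-/sys/class/net}/$1/speed"
}

# is_10g IFACE: succeed if IFACE reports at least 10000 Mb/s.
is_10g() {
  speed=$(link_speed "$1" 2>/dev/null) || return 1
  [ "$speed" -ge 10000 ] 2>/dev/null
}

# Usage (interface name is an example):
# link_speed eth2
# is_10g eth2 && echo "10 Gb/s link"
```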

If this doesn’t work as expected, contact us. (These instructions might be out of date.)